Amanda Erhard (aae25@pitt.edu) and Sovay McGalliard (slm125@pitt.edu)

On the battlefield, teamwork is paramount. When surrounded by an enemy, a unit’s ability to work together efficiently and confidently is the most important factor in victory. A smaller, outnumbered unit may be more successful than a larger group if they have better communication skills. Technology has been increasingly utilized in order to improve communications between separate units to increase effectiveness in battlefield situations. Multi-sensor data fusion (MSDF) technology eliminates uncertainty so no single unit is isolated. This occurs through constant information sharing between field units and the base station, all in different physical locations with different perspectives. A complete picture of instantaneous action is available constantly. Surprise attacks would not be as effective as past attempts, since more enemy locations would be known. In the future, MSDF systems should function autonomously, performing similarly to the character R2D2 in Star Wars. R2D2 demonstrates sophisticated usage of MSDF. He processes data and simultaneously makes inferences to allow him to respond in a manner that emulates a personality. A similar real world example of what may be possible in MSDF technology is the use of robots in medical applications. Robotic algorithms are advancing quickly and eventually, it may be possible to eliminate human hands from the operating room in order to prevent human error. The challenge with MSDF lies in obtaining as much information as possible while determining the accuracy of that information [1]. Engineers face that same challenge when designing MSDF systems for information compilation. The goal of MSDF technology is continuously to gather and re-evaluate the information available. Autonomously, the system should compare and contrast old and new input. The system should also have the ability to make inferences based on the system function’s specified parameters and be able to present the information to a human user. 
In turn, the human operator uses the output from the multi-sensor data fusion system to mobilize.

Two types of knowledge relied upon in multi-sensor data fusion technology (MSDF) are data and information. The two terms appear synonymous to knowledge used in MSDF; however, data and information originate from different sources [2]. Sensors obtain data whereas information is already available in existing databases. The Department of Defense’s Data Fusion Group in the Joint Directors of Laboratories (JDL) created an early definition of an MSDF’s function [2]. JDL describes the method from a military perspective as a “multilevel, multifaceted process dealing with the automatic detection, association, correlation, estimation, and combination of data and information from multiple sources” [2]. This JDL definition states that MSDF collects and combines data from many sensors to draw conclusions about the current conditions of an area under observation. A more general explanation describing the function of MSDF states, “in the context of its usage in the society, it encompasses the theory, techniques and tools created and applied to exploit the synergy in the information acquired from multiple sources (sensor[s], databases, information gathered by humans, etc.) in such a way that the resulting decision or action is in some sense better (…) than would be possible if any of these sources were used individually without such synergy exploitation” [2]. This definition originates after the JDL explanation and provides a broader view of MSDF’s varied abilities. This later perspective allows for the technical differences between data and information by listing sensors and databases both as sources of knowledge. Raw data is obtained from the MSDF network sensors. The sensors collect data about the environment by measuring different physical characteristics of the target area. Multiple sensors also measure the same physical characteristics of the observed area to ensure accuracy [2]. 
Information provided by a database helps the MSDF system identify the goal specified by a human user’s parameters. Raw data, in contrast, is analyzed and combined with other types of raw data to become new information that the MSDF system uses to draw more accurate and detailed conclusions. The final output of an MSDF system is an accurate piece of dynamic intelligence that gives the user more information than could be gained by looking at the raw data from the individual sensors. This information/data fusion process encompasses data, information, sensors, and logic, and it provides accurate inferences that humans use for strategic decisions in warfare or civilian life [2]. Different interpretations of MSDF functions allow the process to be used in many applications. Since the JDL was one of the first groups to define a common MSDF vocabulary, early uses of MSDF were military-related [2]. A current military application of MSDF is the United States Navy’s Cooperative Engagement Capability (CEC) system [1]. The CEC provides the Navy with tracking and air defense capabilities [3]. Another military use of MSDF is the Ground-Based Midcourse Defense (GMD) ballistic missile defense system. GMD uses radar to detect nuclear warheads’ trajectories in combination with ground-based interceptor missiles to prevent the warheads’ detonation [1]. The US military is also working on a prototype called the Guardian Angel Project, used to sense improvised explosive devices (IEDs) in war zones [10]. MSDF is versatile enough to have both military and civilian uses. In civilian life, robotics incorporates MSDF [3]. A future goal is to incorporate this robotic technology into surgery [3]. The general idea behind the MSDF process involves sensors, a database of known information, a process for determining which combinations of data and information are reliable, and an area under study. 
This broad-spectrum formula allows MSDF technology to be broadly applicable. Determining the specific function of an MSDF system requires the user to define the context in which it will be used, for example what provides raw data and what constitutes information.

The physical components of MSDF technology are the sensors responsible for collecting raw data and transmitting it throughout the system. A defining characteristic of an MSDF system is reliability under conditions humans cannot endure. The sensors must therefore withstand extreme stresses such as extreme temperatures, high winds, extreme pressure, storms, and fires. In military applications, sensors must be able to operate without enemy detection, perhaps while hidden from view or from long distances. Designing such durable sensors is a major engineering challenge. However, no matter how resilient a sensor’s physical covering, other factors may contribute to individual sensor failure in the system [2]. Energy sources must be chosen during design to ensure the system will not fail from lack of energy. Sensor placement must also be determined so that sensors do not interfere with other sensors’ data transmissions. MSDF information must have three basic characteristics in order to be as accurate as possible: redundancy, cooperation, and complementarity. For redundancy, several sensors must measure the same critical aspects of the observed area so that their readings can be checked against one another. For cooperation, all aspects of the observed area must be measured. Combining different types of measurements of an environment, for example from motion sensors and photo sensors, results in complementary information [2]. Design challenges are inherent in MSDF systems. Engineers must design the strongest physical covering for the sensors and choose the sensors’ energy sources and placement while minimizing the number of sensors used. The minimum number of sensors must also collect the maximum amount of data possible while the whole system remains undetected. Using as few sensors as possible reduces the energy the system consumes, and therefore the fossil fuel burned to supply it, which is one way MSDF can contribute to sustainability. 
Another challenge is that each MSDF system must be tailored specifically to the environment in which it will be used.
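The redundancy requirement can be illustrated with a small Python sketch. It assumes, purely for illustration, that each sensor reports a value together with a known noise variance, and it combines redundant readings with an inverse-variance weighted mean. That particular weighting rule, the `Reading` class, and the thermometer values are assumptions of this sketch and are not taken from the cited sources.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str
    value: float     # measured quantity, e.g. temperature in degrees Celsius
    variance: float  # assumed known noise variance of this sensor


def fuse_redundant(readings):
    """Combine redundant readings of one quantity by inverse-variance weighting.

    Sensors with lower noise variance receive proportionally more weight.
    Returns the fused value and the variance of the fused estimate.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    fused_value = sum(w * r.value for w, r in zip(weights, readings)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Three hypothetical thermometers observing the same spot.
readings = [
    Reading("thermo_a", 20.0, 4.0),
    Reading("thermo_b", 22.0, 4.0),
    Reading("thermo_c", 21.0, 1.0),
]
value, variance = fuse_redundant(readings)
```

Under these assumptions the fused variance is smaller than that of the best individual sensor, which is the practical benefit of having several sensors measure the same quantity.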

Data fusion refers to techniques that combine data from multiple origins and condense that data to draw conclusions that are simpler than the original combined data. The underlying hypothesis is that drawing data from multiple sources automatically is more efficient and potentially more accurate than having humans draw conclusions by reading data from each of the individual sources. Data fusion systems are categorized as low, intermediate, or high depending on the processing stage at which fusion takes place [4]. Data fusion is a method of data integration followed by reduction or replacement, so understanding data fusion first requires an explanation of data integration. Data integration is the method of pulling together information from multiple sources in reference to a global attribute. It is not a single problem but rather a series of interrelated problems that include extracting and cleaning data from the sources, creating a unified format for the integrated data, transforming data from the sources into data compatible with the unified format using “wrappers”, and posing queries to the unified format [4]. Let us examine two data sets, α and β. Data set α has points p1-p10, coordinates (x1, y1, z1) through (x10, y10, z10), and attributes a1, a2, a3, a4. Data set β has the same points p1-p10 and coordinates (x1, y1, z1) through (x10, y10, z10), with attributes b1, b2. Suppose we want a new integrated data set with attributes a3, a4, b1, b2. Combining these data sets through very simple data integration creates a third data set, σ. Data set σ has points p1-p10, coordinates (x1, y1, z1) through (x10, y10, z10), and attributes a3, a4, b1, b2.

TABLE I, TABLE II, TABLE III
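The α, β, σ example can be sketched in a few lines of Python. The dictionary layout, the function name `integrate`, and the numeric placeholder values are assumptions made only for illustration; the sketch simply joins the two data sets on their shared points and keeps the requested attributes.

```python
def integrate(alpha, beta, keep_from_alpha, keep_from_beta):
    """Build an integrated data set from two sets that share point identifiers.

    Each data set maps a point id to a record holding a "coord" tuple and
    named attributes. Only the requested attributes are carried over.
    """
    sigma = {}
    for point_id in sorted(alpha.keys() & beta.keys()):
        a_row, b_row = alpha[point_id], beta[point_id]
        if a_row["coord"] != b_row["coord"]:
            raise ValueError(f"coordinate mismatch at {point_id}")
        row = {"coord": a_row["coord"]}
        row.update({name: a_row[name] for name in keep_from_alpha})
        row.update({name: b_row[name] for name in keep_from_beta})
        sigma[point_id] = row
    return sigma


# Placeholder values: ten points p1..p10 with made-up coordinates and attributes.
alpha = {
    f"p{i}": {"coord": (i, i, i), "a1": i, "a2": 2 * i, "a3": 3 * i, "a4": 4 * i}
    for i in range(1, 11)
}
beta = {
    f"p{i}": {"coord": (i, i, i), "b1": -i, "b2": -2 * i}
    for i in range(1, 11)
}
sigma = integrate(alpha, beta, ["a3", "a4"], ["b1", "b2"])
```

Each record in `sigma` carries the shared coordinates plus a3, a4, b1, and b2, while a1 and a2 are dropped.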

For a simple example, we can use a reference point of a city. The desired information could be crime statistics, average temperature, rainfall, and population. It is impossible to gather all of this information from a single source, so the multiple sources would be the individual databases that gathered this information. The original method for data integration was to create one data warehouse combining all of the information from all of the databases that was periodically updated. This involved creating one viewing frame (such as a spreadsheet or some sort of digital map) and finding a way to have the data displayed easily in an understandable sense. The difficulty associated with this is that each of the sources will have the data catalogued under different frames of reference. For example, crime rate and population will both be catalogued yearly or perhaps monthly, while temperature will be catalogued by a specific day over a span of many years. One difficulty is displaying crime rate, population, and temperature on one screen. Another problem is that the data must be put through a “wrapper”, code that transforms data from different languages into a unified format [5]. Once this is attained, the data will continually be compounded into this data warehouse. However, this method makes maintaining this database difficult as time passes because all of the information will be displayed at once. After a while, the data will overload the warehouse and then memory storage becomes an issue. An inefficient solution involves storing the data already on other databases in yet another warehouse because valuable memory space is wasted. A far more efficient process is simply to link the databases to one another through a singular “virtual database” that works online and allows the viewer to select a specific timeframe, displaying the information requested as opposed to uploading everything. 
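As a rough illustration of the wrapper and virtual-database idea, the following Python sketch uses two made-up sources for a single city: yearly crime counts and daily temperatures. The source contents, the wrapper functions, and the `VirtualDatabase` class are all hypothetical. The point is only that each wrapper converts its source to a shared record format on request, so nothing is copied into a central warehouse.

```python
from statistics import mean

# Hypothetical source 1: crime counts catalogued per year.
CRIME_SOURCE = {2009: 120, 2010: 110}

# Hypothetical source 2: temperatures catalogued per day (ISO date, deg C).
TEMPERATURE_SOURCE = [
    ("2009-01-01", 1.0),
    ("2009-07-01", 25.0),
    ("2010-01-01", 3.0),
    ("2010-07-01", 27.0),
]


def crime_wrapper(year):
    """Return the yearly source's data for one year in the unified format."""
    if year not in CRIME_SOURCE:
        return []
    return [{"year": year, "attribute": "crime_count", "value": CRIME_SOURCE[year]}]


def temperature_wrapper(year):
    """Aggregate the daily source to a yearly mean in the unified format."""
    days = [temp for date, temp in TEMPERATURE_SOURCE if date.startswith(f"{year}-")]
    if not days:
        return []
    return [{"year": year, "attribute": "mean_temperature_c", "value": mean(days)}]


class VirtualDatabase:
    """Answers queries by calling the wrappers on demand; stores no data itself."""

    def __init__(self, wrappers):
        self.wrappers = list(wrappers)

    def add_source(self, wrapper):
        # Adding a source needs only a new wrapper, not a re-import of all data.
        self.wrappers.append(wrapper)

    def query(self, year):
        records = []
        for wrapper in self.wrappers:
            records.extend(wrapper(year))
        return {record["attribute"]: record["value"] for record in records}


vdb = VirtualDatabase([crime_wrapper, temperature_wrapper])
result = vdb.query(2009)
```

Here `result` holds only the records for the requested year, which mirrors the idea of displaying the information requested rather than uploading everything.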
The virtual-database method is the current model for simple data integration, and it avoids doubling the amount of memory required when compiling information. This system is also more effective when adding another data source because only an adapter and a new wrapper need to be constructed instead of manually integrating an entirely new data set. These data integration systems are defined as a triple ⟨G, S, M⟩, where G is the global schema, S is the set of heterogeneous source schemas, and M is the mapping that routes queries from G to S. The two steps in schema mapping are data exchange and data integration. In data exchange, the source data are put through wrappers and restructured under the target schema [5]. Data integration is the step in which queries are made from the global, or mediated, schema to the source schemas. In this step, the user can make and delete queries through the unified-format interface in order to edit or simplify the data to fit their needs. Data fusion becomes more complicated as more levels are added. The levels of data fusion are categorized as low (information/data fusion), intermediate (feature fusion), and high (decision fusion). The low and intermediate levels of data fusion are almost synonymous with data integration. These levels combine several sources of raw data into a new source of data. Feature fusion extracts certain features from several sources to create a new data set with specific properties pulled from the original data, clearing out some of the unnecessary information. Decision fusion applies classifiers or neural nets to a set of data to provide a better and less biased result. Support Vector Machines (SVMs) with various kernels are an example of such a classifier [6]. SVMs are supervised learning models used for classification and regression; in its standard form, an SVM is a non-probabilistic binary linear classifier. A standard SVM takes a set of data and predicts into which of two possible categories each input should be sorted. 
Given a set of training examples, each labeled as belonging to one of two categories, an SVM training algorithm builds a model that predicts which category a new, unlabeled example falls under. If we imagine the examples as points in space, one region of the space would be classified as A and another region as B, separated by as wide a gap as possible. If a new example falls between these regions, the SVM can still predict which category the new data should fall into.

FIGURE 1 [6]

A data set could be sorted into useful information and extraneous information and inputted into a standard SVM in a way that when new data arrives, the SVM will automatically filter out information predicted to be extraneous. This is a quick and efficient way to edit out unnecessary information without requiring a human for these painstaking decisions.
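The filtering idea above can be sketched with a small linear SVM written in plain Python. The sketch trains by sub-gradient descent on the regularized hinge loss, which is one simple training method chosen here for brevity and is not claimed to be the method used in [6]. The two-dimensional feature vectors, the labels (+1 for useful, -1 for extraneous), and all function names are assumptions for illustration.

```python
def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train_linear_svm(points, labels, epochs=200, learning_rate=0.1, reg=0.01):
    """Fit weights w and bias b by sub-gradient descent on the hinge loss."""
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            if y * (dot(w, x) + b) < 1:
                # Example is inside the margin: move the boundary away from it.
                w = [wi - learning_rate * (reg * wi - y * xi) for wi, xi in zip(w, x)]
                b += learning_rate * y
            else:
                # Example is safely classified: apply only the regularizer.
                w = [wi - learning_rate * reg * wi for wi in w]
    return w, b


def predict(w, b, x):
    """Return +1 (useful) or -1 (extraneous) for a feature vector x."""
    return 1 if dot(w, x) + b >= 0 else -1


def filter_useful(w, b, items):
    """Keep only the items the trained SVM predicts to be useful."""
    return [x for x in items if predict(w, b, x) == 1]


# Hypothetical labeled examples: +1 = useful, -1 = extraneous.
train_points = [(2, 2), (3, 3), (2, 3), (3, 2), (-2, -2), (-3, -3), (-2, -3), (-3, -2)]
train_labels = [1, 1, 1, 1, -1, -1, -1, -1]
w, b = train_linear_svm(train_points, train_labels)

# New, unlabeled data arriving later is filtered automatically.
kept = filter_useful(w, b, [(4, 1), (-1, -4), (2.5, 2.5)])
```

In practice a library implementation with kernel support would normally be used; the hand-written trainer is shown only to keep the example self-contained.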

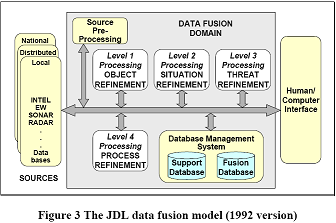

The JDL data fusion model is the most widely accepted and adopted functional model for data fusion. Developed in 1985 by the US Joint Directors of Laboratories Data Fusion Group, this model is still being revised to deal with the problems of introducing a human into the loop. This model divides the data fusion problem into four different functional levels. There are many different versions of the JDL fusion model and all must be specified for use in a particular application. We will discuss the JDL model in terms of target tracking in keeping with this paper’s military focus.

The first level, object refinement, involves data registration, data association, position and attribute estimation, and identification. This requires relating schemas and grouping related schemas into association hypotheses, or “tracks”. In this level, intermediate data (feature) fusion is used to sort the data into categories by type using classifiers such as the SVM [7].

The second level, situation refinement, reconfigures the refined information in terms of warnings, action plans, and inferences, and what they indicate about the distribution of forces and information. Algorithms are used to decide whether and in what way objects are likely to act in hostile manners [7].

The third level, threat refinement, is also called “threat assessment”. It assesses the threat posed by the enemy being tracked. This includes situation, knowledge, and decision awareness, as well as the ability of friendly forces to engage the enemy effectively [7].

The fourth level, process refinement, is an ongoing assessment of the other fusion stages. At this point there is usually a human component executing the decision-making by adjusting parameters and ensuring that data acquisition and fusion are being performed for optimal results. The situation and threat assessments are displayed, and the user then decides how to proceed [7].

FIGURE 2 [7]

These levels can be generalized to apply to situations other than target tracking such as land mine detection, storm tracking, and robotics.
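The data association step mentioned in the target-tracking discussion, in which measurements are grouped into “tracks”, can be illustrated with a greedy nearest-neighbor rule. This rule, the distance gate, and the sample coordinates are assumptions chosen for a compact example; the JDL model itself does not prescribe a particular association algorithm.

```python
import math


def associate(tracks, measurements, gate):
    """Greedily pair each track with its nearest measurement inside a gate.

    tracks: dict mapping track id to a predicted (x, y) position.
    measurements: list of measured (x, y) positions.
    gate: maximum distance at which a measurement may join a track.
    Returns (assignments, unassigned), where assignments maps track id to
    a measurement index and unassigned lists leftover measurement indices.
    """
    candidates = []
    for track_id, track_pos in tracks.items():
        for index, meas_pos in enumerate(measurements):
            distance = math.dist(track_pos, meas_pos)
            if distance <= gate:
                candidates.append((distance, track_id, index))
    candidates.sort()  # closest pairs are considered first

    assignments = {}
    used = set()
    for _, track_id, index in candidates:
        if track_id in assignments or index in used:
            continue
        assignments[track_id] = index
        used.add(index)
    unassigned = [i for i in range(len(measurements)) if i not in used]
    return assignments, unassigned


# Hypothetical predicted track positions and new sensor measurements.
tracks = {"T1": (0.0, 0.0), "T2": (10.0, 10.0)}
measurements = [(9.5, 10.2), (0.3, -0.1), (50.0, 50.0)]
assignments, unassigned = associate(tracks, measurements, gate=2.0)
```

A measurement left unassigned, such as the third one here, could be treated as a candidate new track or as a false alarm at a later stage.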

Military MSDF applications join soldiers with a larger knowledge base to ensure a mission’s success. The US Navy’s CEC is a wireless network of ship and sensor systems that combines sensor data to provide each cooperating unit with real time updates from surrounding units. This network was originally created by Johns Hopkins University’s Applied Physics Laboratory and was later modified to be useful in military engagements [8]. Under CEC, the Navy’s fleet is provided with three key new capabilities. The first capability is to enable multiple ships, aircraft, and land-based air defense systems to have a consistent, precise and reliable air track picture of targets. This network also allows combat threat engagement decisions to be coordinated among the battle group units in real time to prevent misinformation or miscommunication resulting in a poor decision. Finally, the CEC enables distributed targeting information. If a ship or aircraft is not equipped with tracking systems or if a radar system is malfunctioning, the units can still engage a target aircraft or missiles successfully using surrounding units’ radar and tracking. These capabilities allow the US Navy to engage very difficult targets successfully, especially in situations where equipment may be compromised by bad weather or sensor and communication jamming. In such a situation, a single aircraft may be unable to engage an enemy target using its limited sensors. However, using the CEC allows the aircraft to harness the collective information and capabilities of multiple sensors and weapons at different locations. This allows the battle unit to overcome individual system shortfalls or unit location problems by networking all radars so they work as a single “virtual” battle group. The CEC is designed so that even if large portions of the radar are unusable or jammed, the best possible image will be compiled using the information available. 
This provides a very steady image and prevents a poor signal from destroying the system’s imaging capabilities. Combining as many resources as are available protects more soldiers from death or serious physical injury. These capabilities come from a new, extremely reliable Data Distribution System (DDS) [8]. The DDS uses a C-band data link between units to ensure connectivity under poor environmental conditions and jamming. This reliability is required because remote fire-control-quality radar data is fed into the fire-control loop of the engaging units. If this data were lost during an engagement, the aircraft would lose its ability to track air missiles in flight, and the consequences would be severe. CEC also introduced a new processing architecture in the Cooperative Engagement Processor (CEP), which processes the thousands of radar measurements that the DDS distributes around the battle group each second. The CEP uses commercial off-the-shelf (COTS) microprocessor boards mounted inside a reinforced cabinet [8]. Upgrading these boards increases processing speed while maintaining the same computer program architecture.

GMD is the US military’s system for intercepting incoming warheads in space. It is a major component of the US missile defense strategy and aims to protect against ballistic missiles, including intercontinental ballistic missiles. Protecting civilians and their way of life is the military’s primary function. GMD is funded by the US Missile Defense Agency, and operations and controls are executed by the US Army with support from the US Air Force [9]. The system is made up of ground-based interceptor missiles and radar designed to target incoming warheads in space. Many different components work together to detect and eliminate incoming missiles that the military might otherwise not identify in time to destroy. The first step is the initial detection of a ballistic missile launch. The US military accomplishes this with a constellation of Defense Support Program (DSP) satellites in geosynchronous orbit above the earth. These satellites scan the surface for the infrared flares that are characteristic of a ballistic missile launch. Ballistic missiles have a detection window from the time they break cloud cover to the time their motors burn out approximately 200-300 seconds later [9]. Once the missile is detected, the next step in the data fusion process is for the GMD program to find the precise trajectory of the missile. The original detection gives only an approximate trajectory, so more accurate guidance systems must track the missile after rocket burnout and guide an interceptor to the kill range. In theory, an X-band radar system would be able to track the missile warhead after rocket burnout, but this $900-million technology is still in development in the Gulf of Mexico [9]. This X-band radar is needed to differentiate between the actual warhead and the rocket debris cloud that travels with the warhead. 
Once the warhead has been distinguished from the debris, a computer system would be able to calculate its exact trajectory and compute a counter-trajectory for an interceptor to meet the enemy warhead so that both are destroyed safely in space. When this system is perfected, a single data fusion system will be able to identify, track, assess, and eliminate threats with minimal human interaction. Humans will oversee the results once a threat has been detected in the preliminary stages. In the future, the computer may make more accurate and efficient decisions than a human could. The GMD system would then operate autonomously.
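The geometry behind computing a counter-trajectory can be hinted at with a heavily simplified Python sketch. It assumes a flat two-dimensional plane, a target moving at constant velocity, and an interceptor launched from the origin at a fixed speed. A real warhead follows a ballistic path and real interceptors are guided continuously, so this is only the textbook constant-velocity intercept problem, with hypothetical function names and numbers, and not a description of how GMD works.

```python
import math


def intercept_time(target_pos, target_vel, interceptor_speed):
    """Earliest positive time at which the interceptor can meet the target.

    Solves |target_pos + target_vel * t| = interceptor_speed * t for t.
    Returns None when no intercept is possible.
    """
    px, py = target_pos
    vx, vy = target_vel
    a = vx * vx + vy * vy - interceptor_speed ** 2
    b = 2.0 * (px * vx + py * vy)
    c = px * px + py * py
    if abs(a) < 1e-12:
        # Equal speeds: the quadratic degenerates to a linear equation.
        if abs(b) < 1e-12:
            return None
        t = -c / b
        return t if t > 0 else None
    discriminant = b * b - 4.0 * a * c
    if discriminant < 0:
        return None
    root = math.sqrt(discriminant)
    times = [(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)]
    positive = [t for t in times if t > 0]
    return min(positive) if positive else None


def intercept_point(target_pos, target_vel, t):
    """Position of the target, and hence the meeting point, at time t."""
    return (target_pos[0] + target_vel[0] * t, target_pos[1] + target_vel[1] * t)


# Hypothetical numbers in arbitrary units.
t = intercept_time((100.0, 0.0), (0.0, 3.0), 5.0)
point = intercept_point((100.0, 0.0), (0.0, 3.0), t)
```

With these numbers the interceptor covers the same distance to the meeting point that its speed allows in the computed time, which is the condition the quadratic encodes.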

The Guardian Angel Project (GAP) is a prototype for a branch of technology similar to the GMDs. What the GAP proposes to do is detect improvised explosive devices (IEDs) in dangerous parts of Iraq and Afghanistan, protecting more soldiers from unnecessary death or injury. This represents an effort to use imagery and near imagery sensors to look for indeterminate targets. The sensors involved in this technology include infrared and visible-light imaging radars, lower-frequency ground penetrating radars, multi-spectral sensors, and laser-based sensors [10]. By combining low accuracy sensors, the Guardian Angel software will be able to create clearer pictures of areas being scanned for threats. The key idea behind Guardian Angel is to overlay and combine imagery and near imagery data from many different sensors over different passes of time to determine any deviations from one time pass to another that would suggest changes to the scene. These may include disturbed soil, vehicle tracks, holes filled in, or objects moved [10]. The interesting thing about the Guardian Angel algorithm is that it will run on a standard laptop computer which allows mobile analysts to track the information compiled. This is also a great way to test out new equipment and sensors for accuracy without destroying a mission. A sensor is simply added into the system and the data collected is analyzed for correlations with the other information given. By having multiple sensors contribute data, there is less likelihood of a false alarm due to faulty information.
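The overlay-and-compare idea can be sketched as follows. The grids stand in for co-registered images of the same area from two passes, and a cell is reported only when at least two sensors see a change there, which reflects the point about reducing false alarms. The grid values, thresholds, and function names are invented for illustration and say nothing about the actual Guardian Angel software.

```python
def changed_cells(earlier, later, threshold):
    """Cells whose value differs by more than threshold between two passes."""
    return {
        (r, c)
        for r, row in enumerate(later)
        for c, value in enumerate(row)
        if abs(value - earlier[r][c]) > threshold
    }


def fuse_detections(per_sensor_changes, min_sensors=2):
    """Keep only cells flagged as changed by at least min_sensors sensors."""
    counts = {}
    for cells in per_sensor_changes:
        for cell in cells:
            counts[cell] = counts.get(cell, 0) + 1
    return {cell for cell, n in counts.items() if n >= min_sensors}


# Hypothetical 3x3 scenes from two sensors on two passes over the same area.
infrared_before = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
infrared_after = [[10, 10, 10], [10, 18, 10], [10, 10, 17]]
radar_before = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
radar_after = [[5, 9, 5], [5, 9, 5], [5, 5, 5]]

infrared_changes = changed_cells(infrared_before, infrared_after, threshold=5)
radar_changes = changed_cells(radar_before, radar_after, threshold=3)
alerts = fuse_detections([infrared_changes, radar_changes])
```

Each sensor alone flags two cells, but only the cell where both agree survives fusion.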

Data fusion, or more specifically data integration, has been an integral part of many civilian activities for years. When large corporations must compile data coming from various branches or wish to create algorithms to predict their next quarter’s sales, data integration/fusion does this quickly and efficiently. In business intelligence, data fusion is used to compare a company’s profits and marketing to those of competing companies. The oldest and most successful use of data fusion is in geospatial applications. Geospatial science comprises the technology and algorithms applied when mapping the features of the earth and its oceans. Attributes like altitude, type of terrain, average temperature, average rainfall, ocean depth, water salinity, and aquatic life must all be brought together after the information is collected from many different sources. This application is relatively simple because all of the data is easily grouped by latitude and longitude; there are no difficult global schemas to create. Once this information is supplied, MSDF algorithms make inferences that can locate places such as ideal habitats for rescued wildlife or prime salmon and tuna breeding grounds based on inputs such as pollution levels. These algorithms are considerably more complicated, but they are also very useful. Multi-sensor data fusion has fewer applications outside of the military world than general data fusion. However, one application that MSDF suits well is robotics. As robotic systems become more complex and integrated, sophisticated frameworks are needed to embody the intelligence desired in a robot. Multi-sensor data fusion technology is useful in this application because it allows robots to interact with their environments differently based on the information received from different sensors [3]. Classifiers such as the SVM allow robots to respond effectively in different situations without the need for a human controller. 
This is ideal for robotics because the end objective is to program robots that can operate smoothly, effectively, and autonomously. Currently, at the University of Jaen in Spain, engineers are successfully implementing MSDF technology for the use of a robotic arm [3]. This arm is used for tasks such as selecting particular objects from a group, lifting objects, and displacing various items. The arm is equipped with the following sensors: wrist force/torque sensors, vision sensors, an accelerometer, heat sensors, and radar. Multi-sensor data fusion technology enables the arm to determine the target from a group of objects, to ascertain distance from the target, and, once the target is reached, to determine if its systems can manage the object’s weight. Robotic arms similar to these may one day have biomedical uses and become programmed to perform procedures such as examinations or surgeries. Although not that far in development currently, medical researchers are hopeful that someday machines such as these will have the ability to prevent human error and loss of life. One way surgical robots can prevent loss of human life is by decreasing patients’ exposure to disease-causing bodies.

Critical situations such as battlefield conditions or an environmental catastrophe involve continuous input of new information. Commanders need to know the most recent status of enemy positions and strength or of environmental conditions, as well as their own positions and strength. Strong communication saves lives. Multi-sensor data fusion systems are one means of providing continual updates so that the best decision can be made in each instance.

Changes in warfare methodology and an increasing number of large-scale environmental disasters call for a re-evaluation of multi-sensor data fusion systems for the future [11]. Trends show that enemies can no longer be identified by observations based only on physical attributes such as geographic location. Targets may be found all over the world, without obvious physical characteristics that allow them to be tracked. Future data collection and sensing techniques should be adapted accordingly. Specific individuals must be identified as the enemy by name or by action. However, today’s multi-sensor data fusion systems measure physical characteristics to locate threats and cannot measure name or action. Future multi-sensor data fusion systems could take into account “[t]wo new major sources of [data] that have previously been relatively neglected: human observations and Web-based [data]” [11]. Since data on the Internet is publicly available, upcoming data fusion systems could access it readily. Even entertainment websites such as YouTube may provide information that these prospective data fusion systems need for target identification [11]. The challenge lies in how a computer, which interprets characteristics obtainable through measurement and mathematics, will be able to interpret the information humans post on such websites in a form that the humans controlling the system can use for decision making. Computers cannot reliably identify humans’ motives and lies, so humans will become more involved in data fusion for identifying threats. Humans would act as sensors, providing additional input that helps data fusion systems analyze others’ motives [11].

The “human landscape” needs to be understood for outside parties to function in unfamiliar areas during warfare and when providing quick disaster relief. This human landscape consists of “individuals, groups, populations, organizations, and their interactions” [11]. A military perspective necessitates a thorough understanding of the opposing side’s traditions and organizations for better tactical preparation. The population is more willing to interact with those who make them feel safe. Practicing local customs helps to create that trust and thus to gain allies who help the military sooner identify their target. Administering aid for disaster relief becomes smoother when first responders understand exactly what the population needs in the civilian sector [11]. Factors such as average age and salary of those who live in a particular area determine how long that area needs help until the recovery process starts. One potential solution to this modern necessity involves knowledge engineering, a process of obtaining as much information about a particular people from experts studying the area. Experts provide the information that will be entered into the fusion system to allow the reasoning algorithms of each system to work. The data fusion systems’ algorithms may depend on each expert’s method of analysis. Each data fusion system would be formed from a unique thought process [11]. Also, quantifying human observations for data fusion systems to make accurate inferences is a challenge newly being explored. Limited computing powers are only beginning to interpret variables in data provided by physical sensors. Even more variables and a greater degree of uncertainty exist with human testimony [11]. Physical variables such as distance and obstacle obstruction affect eyewitnesses’ and mechanical sensors’ viewpoints equally [11]. Humans’ biases inherent in their upbringing and lifestyles also affect interpretation of an event whereas mechanical sensors contain no bias.

Newton’s third law states that for every action there is an equal and opposite reaction. By analogy, our modern world is now experiencing the effects created by engineering innovations. Modern engineering creations give humans easier lives, but the environment usually shoulders the burden. Today’s engineers must therefore attempt to undo as much damage as possible while slowing the Earth’s current rate of decay. Every new engineering design must work with the environment while reducing human discomfort. Multi-sensor data fusion systems affect both the environmental and human aspects of sustainability. A primary design focus of MSDF is minimizing the amount of energy the system requires. This is accomplished by transmitting only the most vital data collected. Passing around smaller but more accurate quantities of data allows efficient use of the limited energy provided. Efficient use of energy reduces the amount of fossil fuel consumed, thus extending the life of a limited and necessary energy source. Soldiers also benefit from minimizing the amount of energy needed to power systems [12]. The body may carry loads up to one-third of the body’s weight [13]. Soldiers commonly carry about 70 kilograms or more, if necessary [12]. Batteries comprise much of that load [13]. If MSDF uses less energy, the batteries powering the technology can be lighter, which extends soldiers’ physical endurance. The CEC, GMD, and Guardian Angel applications of MSDF all contribute toward protecting humans. CEC and the Guardian Angel project both specifically protect soldiers, increasing the chances that soldiers will return home without death or major physical injury. GMD protects civilians from enemy warheads. Civilian applications of MSDF also benefit the environment and humans. 
Geospatial technology using MSDF will be able to track progress in improving the environment’s well-being. Progress in cleaning previously contaminated areas will be tracked, benefiting both humans and animals. Once an area is determined to be uncontaminated, life may resume there. The Earth’s usable land area is thus maximized as the population continually increases. Robotics using MSDF in surgical procedures will also help humans. Post-surgical patient infections are less likely to develop if an inorganic machine performs the procedure. Bacteria thrive in warm, moist environments such as the human body. Viruses also affect humans. Sterilized machines carry fewer disease-causing organisms than human surgeons. Patients recover faster when they are not also recovering from an infection.

MSDF systems are necessary in today’s information-rich world to isolate only the relevant specifics. This technology gathers as much raw data about a particular environment as possible. The raw data from physical sensors is then combined with database-provided information to make inferences about the observed area. Unnecessary data and information are discarded, leaving the human user with only the relevant specifics needed to make a decision. The military currently uses MSDF in the Navy’s CEC system and also in the GMD program. Guardian Angel is another military-developed project that uses MSDF. Civilian uses include pairing MSDF with robotics to control robotic arms. Challenges involved in designing MSDF systems include physical coverings, energy sources, and sensor placement. Each MSDF system must be designed specifically to match its environment. MSDF helps humans lead easier lives and must also help the Earth last longer. Emerging trends in warfare demonstrate that targets are now also specific humans, not only physical objects. As MSDF systems and algorithms gain experience, precision, and complexity over time, this technology may become a fundamental part of business, military operations, and civilian life. MSDF technology will increase the speed, accuracy, and dependability of computer systems without wasting human time and resources.

We would especially like to thank our writing instructor, Marianne Trale, for her invaluable guidance throughout this process. A special thanks to our chair, Richard Velan, and to our co-chair, Nathan Roberts, for their expert advice. We would like to thank the Bevier librarians for their guidance in directing our research. Thanks also to Bob Erhard for providing the inspiration for our paper. We would also like to thank Christine Bontempi and Zhannetta Gugel for their proofreading expertise.