| HPS 0628 | Paradox | |


Supertasks

John D. Norton

Department of History and Philosophy of Science

University of Pittsburgh

http://www.pitt.edu/~jdnorton

The resolution of Zeno's paradoxes of motion depended on the idea that completing an infinity of actions is not, by itself, an impossibility. If contradictions follow after assuming that some infinity of actions has been completed, they derive from particular, additional assumptions introduced into the scenario. They do not derive from something inherently contradictory in the nature of the infinity of actions specified.

Whether this last idea is so has become the subject of extensive investigations in a new literature, starting in the last century, on Zeno-like paradoxes. Supertasks are tasks in which an infinity of actions is to be completed in a finite time. The repeated suggestion is that there is something inherently wrong about a supertask; and the examples below explore ways in which this problem can be teased out.

For example, Max Black makes his purpose clear when he proposes the transfer machine described below.

"I am going to argue that the expression, "infinite series of acts," is self-contradictory, and that failure to see this arises from confusing a series of acts with a series of numbers generated by some mathematical law. (By an "act" I mean something marked off from its surroundings by having a definite beginning and end.) In order to establish this by means of an illustration I shall try to make plain some of the absurd consequences of talking about "counting an infinite number of marbles." And in order to do this I shall find it convenient to talk about counting an infinite number of marbles as if I supposed it was sensible to talk in this way. But I want it to be understood all the time that I do not think it sensible to talk in this way, and that my aim in so talking is to show how absurd this way of talking is. Counting may seem a very special kind of "act" to choose, but I hope to be able to show that the same considerations will apply to an infinite series of any kinds of acts."

My view is that this literature has failed to show that there is such an inherent problem. When a contradiction arises, it always seems possible to trace its origin to some added assumption that is distinct from the idea of the completion of an infinity of actions. However, in the process, the literature has produced a fascinating array of examples that may require some delicate analysis in order not to fall into confusion. We will examine them here.

There is a strong literature on supertasks. A notable source is a collection of foundational papers from 1967 in W. Salmon, ed., Zeno's Paradoxes. Indianapolis: Hackett, 2001. A recent synoptical treatment is John B. Manchak and Bryan W. Roberts, "Supertasks", The Stanford Encyclopedia of Philosophy. The name "super-tasks" was introduced by James Thomson in a 1954 paper reproduced in the Salmon volume.

How might the infinity of actions of Zeno's dichotomy cause trouble? One might think that the earlier resolution succeeded only because the actions the runner must carry out are not proper actions at all. Passing a point on a course is not really doing something that is worthy of the label "action." What if we require that the runner has to do some definite, discrete action at each of the positions listed in the dichotomy: the 1/2 point, the 3/4 point and so on?

We might imagine that the runner stops at each of these points and plants a flag. What makes the planting a discrete action is that it requires some non-zero time to perform. Let us say that it takes one unit of time for each flag planting.

The table tracks how much time is needed for the entire process:

| Action | Time |
| --- | --- |
| Traverse 0 to 1/2 point | 1/2 |
| Plant flag at 1/2 point | 1 |
| Traverse 1/2 to 3/4 point | 1/4 |
| Plant flag at 3/4 point | 1 |
| Traverse 3/4 to 7/8 point | 1/8 |
| Plant flag at 7/8 point | 1 |
| etc. | etc. |

That is, the total time needed is:

1/2 + 1 + 1/4 + 1 + 1/8 + 1 + ...

This total time has two parts. The time for the traversals is

1/2 + 1/4 + 1/8 + ... = 1

and (as we saw in the analysis of the dichotomy paradox) sums to unity. However it is otherwise with the flag plantings. They require time:

1 + 1 + 1 + 1 + 1 + ... = ∞

That is, the total time needed to complete this staccato run is infinite. That means that it cannot be completed in any finite time.

It is easy to see that nothing intrinsically troubling about the infinity of actions has been uncovered here. The source of the infinity is the decision in the specification of the staccato run to assign unit time to the flag plantings. Since there are an infinity of them, all the plantings together require infinite time.

The simple remedy is to replace these unit times by some suitable acceleration in the flag planting. What if the times for successive plantings decrease geometrically as 1/2, 1/4, 1/8, ...? Each flag planting would require some non-zero time, while the runner pauses at the appropriate point. That is, we have preserved the discreteness of the action of the planting of a flag.

We now have for the times required:

| Action | Time |
| --- | --- |
| Traverse 0 to 1/2 point | 1/2 |
| Plant flag at 1/2 point | 1/2 |
| Traverse 1/2 to 3/4 point | 1/4 |
| Plant flag at 3/4 point | 1/4 |
| Traverse 3/4 to 7/8 point | 1/8 |
| Plant flag at 7/8 point | 1/8 |
| etc. | etc. |

That is, the total time needed is:

1/2 + 1/2 + 1/4 + 1/4 + 1/8 + 1/8 + ...

= (1/2 + 1/2) + (1/4 + 1/4) + (1/8 + 1/8) + ...

= 1 + (1/2 + 1/4 + 1/8 + ...) = 1 + 1 = 2

This modified staccato run can be completed in finite time. Requiring that the infinity of actions in the dichotomy be discrete actions each requiring some non-zero time need not lead to an infinite time being needed for the entire process.
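The contrast between the two versions of the staccato run can be checked numerically. What follows is only a minimal sketch in Python; the function name `staccato_time` and its parameters are illustrative assumptions and not part of the original analysis. It adds up the times for the first n traversals and flag plantings under each rule for the planting times, using exact fractions.

```python
from fractions import Fraction

def staccato_time(n_stages, accelerated):
    """Total time for the first n_stages traversals and flag plantings.

    Stage i (i = 1, 2, 3, ...) is a traversal taking time 1/2**i followed
    by a flag planting. The planting takes unit time in the original
    staccato run and time 1/2**i in the accelerated version.
    """
    total = Fraction(0)
    for i in range(1, n_stages + 1):
        traverse = Fraction(1, 2**i)
        plant = Fraction(1, 2**i) if accelerated else Fraction(1)
        total += traverse + plant
    return total

# Compare the two runs after 1, 10 and 50 stages.
for n in (1, 10, 50):
    print(n, float(staccato_time(n, False)), float(staccato_time(n, True)))
```

With unit planting times the partial total exceeds n after n stages and so grows without bound. With the accelerated plantings the partial totals stay below 2 and approach it.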

The staccato run is an instance of one type of effort to show a problem with the completion of an infinity of actions. These efforts look at the sequence of actions themselves and try to find some difficulty. A more promising approach to finding trouble concerns not the actions themselves, but the final state achieved by the system. Consideration of this final state has proven more fertile in identifying intriguing puzzles (although I do not believe any ultimately succeed in showing that completion of an infinity of actions is impossible by its nature).

The best known of these is James Thomson's lamp. We have already seen this paradox earlier in the "Budget of Paradoxes." To recall the account given there:

----------------------------------------

A lamp is switched on at one minute to midnight; off at 1/2 minute to midnight; on at 1/3 minute to midnight; off at 1/4 minute to midnight; and so on all the way to midnight. By midnight, an infinity of switchings has been completed.

single lamp from https://commons.wikimedia.org/wiki/File:Idea_lightbulb_silhouette.svg

Here's the sequence in a table.

| Time before midnight (minutes) | Lamp |
| --- | --- |
| 1 | ON |
| 1/2 | OFF |
| 1/3 | ON |
| 1/4 | OFF |
| etc. | etc. |

The puzzle: At midnight, is the lamp ON or OFF?

It cannot be ON, since each moment at which the lamp is switched on is followed by another moment at which it is switched off.

It cannot be OFF, since each moment at which the lamp is switched off is followed by another moment at which it is switched on.

----------------------------------------

The paradox resides in a contradiction. The lamp must be either ON or OFF at midnight, but, we infer, it can be neither.

The source of the contradiction lies in the impoverished dynamics assumed for the state of the lamp and its switching. It is what I shall call:

Continuity dynamics: the state of the lamp at any given time is the state to which it was switched at the moment of the LATEST SWITCHING PRIOR to the given time.

This simple continuity dynamics is adequate for our normal engagement with lamps. However it is inadequate for the contrived supertask scenario envisaged by Thomson.

All we can conclude from the analysis is that simple continuity dynamics is unable to assign any state to the lamp at midnight. It fails to apply because:

(a) the dynamics requires for its application that there be a latest switching prior to the time in question; and

(b) by construction, there is no latest switching prior to midnight.

That inadequacy is different from saying that the lamp can be neither ON nor OFF. All it is saying is that the continuity dynamics cannot inform us of the state of the lamp at midnight or even if it has a state.
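The way continuity dynamics falls silent at midnight can be made concrete in a short sketch. The Python function below is an illustration under stated assumptions, not a definitive model: the name `lamp_state` is hypothetical, time is measured in minutes before midnight, and "prior" is read as strictly prior. For any moment before midnight there is a latest prior switching and so a definite state. At midnight there is none, and the function returns `None` rather than ON or OFF.

```python
from fractions import Fraction
from math import ceil

def lamp_state(minutes_before_midnight):
    """State assigned by continuity dynamics, or None if it assigns none.

    Switching number n (n = 1, 2, 3, ...) happens 1/n minutes before
    midnight. Odd n switches the lamp ON; even n switches it OFF.
    """
    s = Fraction(minutes_before_midnight)
    if s < 0 or s >= 1:
        raise ValueError("sketch only covers 0 <= minutes before midnight < 1")
    if s == 0:
        # Every switching is prior to midnight, so there is no LATEST one.
        return None
    # Switchings strictly prior to this moment are those with 1/n > s,
    # that is, n < 1/s. The latest is the largest such integer n.
    latest = ceil(1 / s) - 1
    return "ON" if latest % 2 == 1 else "OFF"

print(lamp_state(Fraction(3, 4)))  # only switching 1 has happened
print(lamp_state(Fraction(2, 5)))  # switchings 1 and 2 have happened
print(lamp_state(0))               # midnight: no latest prior switching
```

The `None` returned at midnight records a gap in the dynamics. It is not a third state of the lamp.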

An analogy might help. I tell you that there is a lamp on the table in front of me. I ask "Is it ON or OFF?" The appropriate answer is that I have not given you enough information to decide. It may be either ON or OFF; or I may even be lying so that there is no lamp at all. The information given is too little for you to know. Correspondingly, continuity dynamics gives us too little information to determine the state of the lamp at midnight.

For those who find this resolution too hasty, we shall return in a moment with a physical model of the lamp that shows either state to be compatible with what Thomson's scenario of switching requires.

An idealized bouncing ball executes a supertask in its completion of an infinity of bounces in a finite time. The supertask itself is not especially noteworthy. There seems to be nothing paradoxical or troubling about it. That is why the example is useful. We will use it in the next section to show that the Thomson lamp supertask is also not problematic.

The idealized physics of the bouncing ball follows the standard idealization in physics textbooks. When a ball bounces, the speed after the bounce is some constant fraction of the speed before. This ratio is the "coefficient of restitution" k and it lies somewhere in the range of 0 to 1.

This constant coefficient enters into two relations:

Time of next bounce = k x time of previous bounce

Height of next bounce = k² x height of previous bounce

The details for those who want them are below.

If we choose a value of k=1/2 and set the time for the first bounce as 1, then the next bounce needs time (1/2)x1 = 1/2 and the next needs (1/2) x (1/2) = 1/4, and so on. There are similar results for the heights. We find quickly that the times and heights of subsequent bounces will be:

Times: 1, 1/2, 1/4, 1/8, ...

Heights: 1, 1/4, 1/16, 1/64, ...

With this schedule of bounces, an infinity of bounces will be completed in finite time:

Time to complete all bounces = 1 + 1/2 + 1/4 + 1/8 + ... = 2

Other values of k will give a different schedule of times and heights. As long as 0<k<1, the schedule of times will always be such that an infinity of bounces is completed in a finite time.
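The schedules for any value of k can be generated with a few lines of code. This is a minimal Python sketch; the function names `bounce_schedule` and `total_time` are illustrative assumptions. It takes the first bounce to have time 1 and height 1, as in the text, and uses the standard sum of a geometric series for the total time.

```python
def bounce_schedule(k, n_bounces):
    """Times and heights of the first n_bounces bounces.

    The first bounce has time 1 and height 1. Each later bounce has
    k times the duration and k**2 times the height of the one before.
    """
    times = [k**i for i in range(n_bounces)]
    heights = [k**(2 * i) for i in range(n_bounces)]
    return times, heights

def total_time(k):
    """Sum of the geometric series 1 + k + k**2 + ... for 0 < k < 1."""
    if not 0 < k < 1:
        raise ValueError("k must lie strictly between 0 and 1")
    return 1 / (1 - k)

times, heights = bounce_schedule(0.5, 4)
print(times)    # [1.0, 0.5, 0.25, 0.125]
print(heights)  # [1.0, 0.25, 0.0625, 0.015625]
print(total_time(0.5), total_time(0.75))  # 2.0 4.0
```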

The figure shows the bounces drawn to scale for k=3/4, which means that the infinity of bounces completes in 4 units of time, where a single unit is the time to complete the first bounce. The larger value of k means that the heights do not decay as fast as they would with k=1/2, which makes them easier to see in the figure. The ball is assumed to be moving horizontally, with its horizontal motion unaffected by the bounces. Thus the horizontal displacement is a graphical replacement for time, and the infinite sequence of bounces will be completed while the ball has moved only a finite distance horizontally.

The coefficient of restitution k for the ball's bounce is the ratio of its speed after the bounce, V, to its speed before the bounce, v:

V = k v

Since the kinetic energy of the ball at the bounce is proportional to (speed)², we have a similar ratio:

Energy after = k² x energy before.

In a homogeneous gravitational field, the height to which the ball rises in each cycle is proportional to this energy, since the potential energy at a height is just mass x g x height. We have for the height h of a bounce and the height H of the subsequent bounce that

H = k² h

The time τ to fall from a height h is governed by the familiar h = (1/2)gτ². Inverting, we have that the time τ to fall from the apex of a bounce is

τ = √(2h/g)

The total time for a bounce is just twice this, since the time to rise to the apex equals the time to fall from the apex. If t is the total time for one bounce and T the total time for the next, we can combine these results to give T/t = √H/√h = k. That is:

T = k t

For an infinite sequence of bounces with 0<k<1, the successive times of the bounces will be 1, k, k², k³, k⁴, ... and the total time will be the sum of the geometric series:

1 + k + k² + k³ + k⁴ + ... = 1/(1-k)

The resolution above of the Thomson lamp supertask problem was that the lamp could be either on or off at midnight. The schedule of switching leaves open which it might be.

To secure this resolution, we need to show that either end state--on or off--is consistent with the schedule of switching. That is, we need to show that adding one or other of the end states as an assumption does not enable us to deduce a contradiction. Demonstrations of consistency of sets of propositions can be difficult or even impossible in principle. However there is a widely used strategy that does the job well enough. This is the idea of a relative consistency proof. In it, we show that the system in question can be recreated within another system that we presume to be consistent. The consistency of that other system then assures us of the consistency of the system in question.

This strategy was employed to noteworthy success in the nineteenth century to show the consistency of non-Euclidean geometries. In a non-Euclidean, spherical geometry, it is possible to have triangles whose angles sum to three right angles. Euclidean geometry requires the sum of the angles of all triangles to be just two right angles. Is the non-Euclidean geometry consistent? How can we be sure that we cannot deduce a contradiction in it? It turned out that this non-Euclidean, spherical geometry corresponds in its basic laws to the geometry of great circles on spheres in a Euclidean space. Any construction in the first corresponds to one in the second, and vice versa. If the geometry of Euclidean space is consistent, then so is the geometry of great circles on spheres and thus so is the non-Euclidean spherical geometry.

The figure shows a triangle made of great circles on a sphere such that the sum of its angles is three right angles.

Another example that may be familiar to some of you is the consistency of i, the square root of minus one. Complex arithmetic employs quantities that are the sum of ordinary real numbers and multiples of i. All values of complex numbers form a two dimensional space and manipulations within it can be recreated as ordinary algebraic manipulations of a two dimensional surface with two real coordinates. In so far as we accept that the latter system is consistent, we must also accept the consistency of complex arithmetic.

How can we apply this technique to establish the consistency of the two different end states proposed above for the Thomson lamp? To do so, we will consider two switching mechanisms that employ idealized electric circuits. In place of the usual switch, the mechanisms will employ an electrical contact, such as shown in the figure.

In its first "ON" setting, when a conducting metal ball is resting on the two ends of the contacts, an electric circuit is completed and the lamp is on. If the ball does not touch the contacts, the lamp is off.

In the mechanism's second "OFF" setting, the situation is reversed. When the metal conducting ball is resting on the contacts, the lamp is short circuited and switched off. If the ball does not touch the contacts, the lamp is on.

If the ball is the bouncing ball of the last section, we have a mechanism that will realize the switching schedule of the Thomson lamp. Whether the mechanism is in its ON or OFF setting, as the ball completes its infinite sequence of bounces, it will switch the lamp on and off infinitely often and in a finite time.

• If the mechanism is in its "ON" setting, then the ball will come to rest on the electrical contacts and the lamp will be ON at the end of the infinity of switchings.

• If the mechanism is in its "OFF" setting, then the ball will come to rest on the electrical contacts and the lamp will be OFF at the end of the infinity of switchings.

In so far as the switching mechanisms can be consistently implemented, we have a demonstration that either end state--ON or OFF at midnight--is consistent with the schedule of switchings required by the Thomson lamp.
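The behavior of the two mechanisms can be sketched in code. The following Python fragment is an illustration under assumptions that go beyond the text: the names `ball_height` and `lamp` are hypothetical, the ball follows a parabolic arc within each bounce, it touches the contacts only at the instants when its height is zero, and k is supplied as an exact fraction. After the total time 1/(1-k) the ball rests on the contacts.

```python
from fractions import Fraction

def ball_height(t, k=Fraction(1, 2)):
    """Height of the idealized bouncing ball at time t >= 0.

    Bounce n (n = 0, 1, 2, ...) lasts k**n and peaks at height k**(2*n).
    After the total time 1/(1-k) the ball is at rest on the contacts.
    """
    t = Fraction(t)
    if t >= 1 / (1 - k):
        return Fraction(0)            # all bounces completed
    start, duration, peak = Fraction(0), Fraction(1), Fraction(1)
    while t >= start + duration:      # find the bounce containing t
        start += duration
        duration *= k
        peak *= k * k
    u = (t - start) / duration        # fraction of this bounce elapsed
    return 4 * peak * u * (1 - u)     # parabolic arc, zero at both ends

def lamp(t, setting, k=Fraction(1, 2)):
    """Lamp state at time t for the "ON" or "OFF" setting of the mechanism."""
    touching = ball_height(t, k) == 0
    if setting == "ON":
        return "ON" if touching else "OFF"
    return "OFF" if touching else "ON"

# For k = 1/2 the bounces are complete at t = 2.
print(lamp(2, "ON"), lamp(2, "OFF"))
```

In this sketch the same schedule of bounces ends with the lamp ON in one setting and OFF in the other. That is the point of the relative consistency argument.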

This physical simulation of the Thomson lamp mechanism was conceived by Shahin Kaveh on the occasion of the conference “Norton4 Everyone?” concerning my book draft, The Material Theory of Induction. October 27-28, 2018, Center for Philosophy of Science, University of Pittsburgh. He was assisted by Bryan Roberts and the construction was carried out by Charles (Chuck) Fleishaker, an engineering technician at the University of Pittsburgh electronics shop.

The idea that something untoward happens at the end of a supertask is illustrated even more vividly by Max Black's transfer machine. It recreates the switching of Thomson's lamp in the motion of a marble. (However Black's paper was written before Thomson's. Yet somehow Thomson's example seemed to attract more attention.)

There are two trays and a marble. A mechanical device shifts the marble back and forth between the left and right trays according to the following schedule:

| Time before midnight (minutes) | Shift |
| --- | --- |
| 1 | To R |
| 1/2 | To L |
| 1/3 | To R |
| 1/4 | To L |
| etc. | etc. |

Once the infinitely many shifts are completed, where is the marble? We have the now familiar conundrum:

• The marble cannot end up at midnight in the left tray, since each time it was moved there, a subsequent shift moved it out.

• The marble cannot end up at midnight in the right tray, since each time it was moved there, a subsequent shift moved it out.

These two conclusions contradict the assumption that the marble has to be somewhere at midnight; and there are only two possibilities, the left or the right tray.

Black adds a slight wrinkle by introducing two different machines to carry out the switchings. We imagine that the left to right switchings are carried out by one machine; and the right to left switchings by a second machine. Let us say that some argument leads the operator of the left to right machine to conclude that at midnight the marble ends up in the right tray (for that is where the left to right machine persistently tries to place it). Then the situation as perceived by the operator of the right to left machine will be reversed. The operator will use the reversed argument to conclude that the marble ends up in the left tray (for that is where the right to left machine persistently tries to place it).

The resolution of the paradox is, in its fundamentals, the same as the resolution of the Thomson lamp supertask: the schedule of switchings prior to midnight does not fix the position of the marble at midnight. It cannot even tell us if the marble will have any position at midnight. The failure comes from the tacit use of a dynamics that falls silent in the very case Black has contrived:

Continuity dynamics: the position of the marble at any given time is the position to which it was switched at the moment of the LATEST SWITCHING PRIOR to the given time.

This dynamics suffices for the motion of bodies in ordinary circumstances. However it fails for the contrivance of Black's transfer machine. For, by its contrivance, there is no latest switching prior to midnight. Thus continuity dynamics fails to specify the location of the marble or even if the marble has any location at all. The contradiction is eliminated since the two inferences of the conundrum above apply only to times prior to midnight.

A helpful way to see this difficulty is to plot a spacetime diagram of the motions of the marble. As is standard in these diagrams, time is represented "up" the figure and space "across."

The figure shows clearly that the marble position oscillates faster and faster between the two positions L and R and does not settle down to any definite position as the time approaches midnight.

This continuity dynamics derives from a stronger, natural assumption about bodies: that their trajectories in spacetime are continuous; or equivalently, when we plot their positions in spacetime we recover continuous curves. Continuity here is a notion that has been given careful attention by the mathematicians of the nineteenth century. It is defined at a point on a curve and the curve overall is continuous if the curve is continuous at every point.

The relevant notion for the trajectory of the spacetime diagram is continuity from below, that is, continuity from earlier times in this spacetime diagram. The trajectory is continuous from earlier times at some time if the limit of positions of earlier times is defined and agrees with the position at that time. An example of a trajectory that is continuous at midnight is shown in the figure below:

The bouncing ball above is a variant of this motion that similarly has a definite position at the conclusion of infinitely many turnings.

This notion of continuity can give us a more general version of the continuity dynamics defined above:

More general continuity dynamics: The position of the marble at any time is the limit of its positions at prior times.

The motion of the marble does not conform with this more general continuity dynamics at midnight. For the marble's position prior to midnight approaches no single limit position.
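The failure of the limit can be illustrated numerically. The Python sketch below is only suggestive, since no finite sample can establish or refute a limit. The names `tail_spread`, `marble` and `damped` are illustrative assumptions, and `damped` is a hypothetical comparison case of an oscillation whose turning points shrink, in the manner of the bouncing ball. The sketch measures how far apart the positions remain over later and later stretches of the sequence of shifts.

```python
def tail_spread(position, start, stop):
    """Largest minus smallest position over shifts start, ..., stop - 1."""
    values = [position(n) for n in range(start, stop)]
    return max(values) - min(values)

def marble(n):
    """Tray after the nth shift: 1 for the right tray, 0 for the left."""
    return 1 if n % 2 == 1 else 0

def damped(n):
    """Hypothetical comparison: turning points that shrink toward 0."""
    return (-0.5) ** n

for start in (1, 10, 100):
    print(start,
          tail_spread(marble, start, start + 50),
          tail_spread(damped, start, start + 50))
```

The spread of the marble's positions stays at 1 however late we start, so the positions approach no single value. The spread of the damped motion shrinks toward 0, which is what a definite limiting position requires.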

We can return to the accelerating spaceship paradox of the earlier chapters and use the ideas of the Black transfer machine to create another somewhat more surprising result. Recall the formulation of the paradox:

A spaceship leaves earth, traveling at two units of speed. It has a very powerful rocket motor and can repeatedly double its speed. It does so quite rapidly. It doubles its speed at 1/2 minute; and again at 3/4 minute; and again at 7/8 minute; and so on for the full minute.

Here is a schedule of its speeds and distances covered in each of the indicated time intervals.

| Time interval = duration | Speed | Distance covered = speed x time |
| --- | --- | --- |
| 0 to 1/2 = 1/2 | 2 | 1 |
| 1/2 to 3/4 = 1/4 | 4 | 1 |
| 3/4 to 7/8 = 1/8 | 8 | 1 |
| 7/8 to 15/16 = 1/16 | 16 | 1 |
| etc. | etc. | etc. |

The result is that at time=1 and later the spaceship is no longer anywhere in space. The totality of the distances traversed is infinite. We resist the temptation to say that the spaceship is "infinitely far away" since there are no points in space infinitely far away. Every point in space is some finite distance away.

The elaboration is that we do not have just one spaceship, but an infinity of them filling all space in some direction. They are numbered ..., -2, -1, 0, 1, 2, ... Each now accelerates according to the schedule of motions just sketched. The entire space filling row of spaceships accelerates as shown in the figure below.

We know that this acceleration scheme will lead each spaceship to be no longer anywhere in space at time 1, the end of the schedule. That is now true for the totality of the spaceships. To see what this means, pick some location, such as "here" indicated in the figure. Which spaceship will be "here" at time=1 and later? We can run through the possibilities:

Spaceship 0 is not here at time = 1, since it had left by time = 1/2.

Spaceship -1 is not here at time = 1, since it had left by time = 3/4.

Spaceship -2 is not here at time = 1, since it had left by time = 7/8.

Spaceship -3 is not here at time = 1, since it had left by time = 15/16.

and so on for all the remaining spaceships -4, -5, -6, ...

That is, we conclude that there is no spaceship here at time=1. Of course there is nothing special about "here." This inference applies to any position. Yet we also come to the curious conclusion that space is full of spaceships for all times prior to 1.

Space is full at time 7/8.

Space is full at time 15/16.

Space is full at time 31/32.

and so on, coming arbitrarily close to time 1.

All the time they are whizzing past faster and faster...

...and then at time 1 !!POOF!!, space is suddenly empty!

Once again we can draw the spacetime diagram and confirm that all the spaceships have vanished:

At any time we care to nominate prior to time =1, the figure shows that there is a spaceship at every location in space. Then at time = 1 and later, no position has a spaceship.
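The schedule of departures from "here" can be reproduced with a short computation. This Python sketch rests on assumptions not fixed by the text: the names `distance_travelled` and `latest_arrival` are hypothetical, spaceship m is taken to start at position m so that neighboring spaceships are one unit apart, and "here" is position 0. It computes how far each spaceship has moved by a time before 1 and, from that, the number of the spaceship that has most recently reached "here."

```python
from fractions import Fraction
from math import floor

def distance_travelled(t):
    """Distance covered by one spaceship by time t, for 0 <= t < 1.

    During interval j (j = 0, 1, 2, ...), which runs from 1 - 1/2**j to
    1 - 1/2**(j+1), the speed is 2**(j+1), so each full interval adds 1.
    """
    t = Fraction(t)
    if not 0 <= t < 1:
        raise ValueError("the schedule only covers times 0 <= t < 1")
    distance, start, j = Fraction(0), Fraction(0), 0
    while True:
        length = Fraction(1, 2 ** (j + 1))
        speed = 2 ** (j + 1)
        if t < start + length:
            return distance + speed * (t - start)
        distance += 1          # speed * length = 1 for a full interval
        start += length
        j += 1

def latest_arrival(t):
    """Number of the spaceship that most recently reached position 0."""
    return -floor(distance_travelled(t))

for t in (Fraction(0), Fraction(1, 2), Fraction(3, 4), Fraction(7, 8)):
    print(t, latest_arrival(t))
```

As t approaches 1 the number returned decreases without bound. No spaceship number is left over to be "here" at time 1.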

While the result seems puzzling, the disappearance of all the spaceships accords with the notions of continuity seen in the Black transfer machine. Its continuity dynamics can assign no definite position to any of the spaceships at time=1 and later. The simplest case compatible with this scenario is that the spaceships no longer exist. That is only the simplest possibility. At time=1 and later, the spaceships could be anywhere in space, such as back at their starting points. The continuity dynamics is unable to tell us which. The figure above just illustrates this simplest case.

The issues raised by these supertasks may have an unexpected application: the question of what it means for arithmetic statements to be true. Grünbaum, in the paper in which he introduces the "staccato run" above, begins his discussion by recalling Hermann Weyl's discomfort with completing an infinity of computations in the context of the meaning of arithmetic propositions.

Before we turn to the harder case of propositions in arithmetic, it will be helpful to think in general terms of some ways that a proposition can be true. Philosophers like to talk of "truth conditions" here. They are what has to be the case in the world if some proposition is true.

https://www.nasa.gov/sites/default/files/thumbnails/image/potw1703a.jpg

Take the proposition:

There are infinitely many stars.

The truth conditions for this proposition are obvious. It is just that, as a matter of cosmic fact, there is an infinity of stars scattered through space. There is no guarantee in modern cosmology that this is the case. If space is finite in volume, then only finitely many stars are needed to fill the roughly uniform distribution of stars in space. However we do know how things would have to be for this proposition to be true.

Now consider a different sort of proposition:

There are [fill in some number] many words in the English language.

The truth of this proposition is unlike that of the number of stars in the universe. The truth of this proposition depends on what we humans do. There are infinitely many ways we can combine the letters of the alphabet to form words (assuming there is no limit on the length). But virtually all of those combinations are not included as words of English. To be included and thus to figure in the count, someone has to declare it a word. Inclusion in a dictionary is one way this can happen.

Now consider the propositions of arithmetic. Take the simple proposition:

17 is a prime number.

What is it for this to be a true proposition? What is it in the world that makes it true?

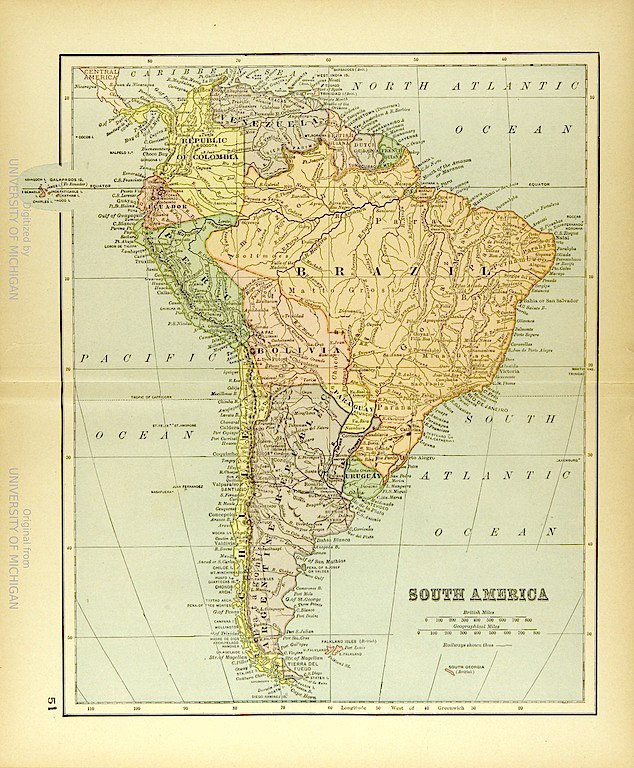

At one extremity of views is the idea that its truth conditions are akin to those of the proposition that there are infinitely many stars. This is a factual matter independent of what we humans do. This is the Platonic (or as it is often now called "realist") position. There is a realm in which numbers exist as entities. Arithmetic truths are truths about them in much the same way as the proposition "The Amazon is a river in South America" is a proposition about world geography.

https://commons.wikimedia.org/wiki/File:1894_Map_of_South_America.jpg

Many find this extreme unpalatable. Just where are these numbers? How can we know about them?

There is no easy answer to the where question. Numbers do not exist in a museum where they are arrayed in glass cases for our inspection.

The how question can be answered. We use our faculty of reason to learn about numbers. Perhaps reason transports us to an other-worldly museum of mathematics where this faculty allows us to inspect and learn about numbers.

This last answer suggests another possibility. If reason is doing the work for us, why posit the other-worldly museum of mathematics at all? Might it all just be reasoning?

At this other extremity of views, the truth conditions of the proposition lie in what we humans do. They are akin to the truth conditions of the number of words in the English language. In this vein, we might take the truth of this proposition about 17 simply to reside in the outcome of a computation. It is a human activity. We check:

2 is not a factor of 17.

3 is not a factor of 17.

4 is not a factor of 17.

...

16 is not a factor of 17.

The totality of this computation simply is what it is for the proposition to be true.
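The computation just listed is easily carried out mechanically. The following Python sketch is only an illustration; the function name `factor_checks` is an assumption. It performs each of the checks from 2 to 16 in turn and reports the outcome.

```python
def factor_checks(n):
    """Check every candidate factor from 2 to n - 1, as in the list above."""
    return [(d, n % d == 0) for d in range(2, n)]

checks = factor_checks(17)
for d, divides in checks:
    print(f"{d} is {'' if divides else 'not '}a factor of 17.")
print("17 is prime:", not any(divides for _, divides in checks))
```

The computation is finite: fifteen checks, each a single division. That is what makes this case unproblematic on the computational view of arithmetic truth.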

Now comes the complication. There are many infinite facts in arithmetic. For example we know that every even number can be written as the sum of two odd numbers:

2 = 1 + 1

4 = 3 + 1

6 = 5 + 1 = 3 + 3

8 = 7 + 1 = 5 + 3

10 = 9 + 1 = 7 + 3 = 5 + 5

and so on for infinitely many even numbers.

If the truth of the totality requires that each case be computed, or at least be possible to be computed, we have arrived at a supertask, if the computation is to be completed in a finite time.

Each individual member of the totality can be computed with finitely many additions. There are, for example, only 50 odd numbers less than 100. So we need check only 50x50/2 combinations to see if any pair of them add to 100. However to check all in the infinite totality requires infinitely many additions.

If completing infinitely many actions is impossible, then this simple notion of truth in arithmetic fails.

What is an alternative that retains the centrality of human actions? It is a finitist conception of truth in arithmetic. While we cannot demonstrate the truth of the totality by computing the truth of each member individually, there is a small piece of algebra that gives the result we want.

Any even number can be written in the form "2n" for n some number.

• If n is odd, then n + n are two odd numbers summing to 2n.

• If n is even then (n-1) + (n+1) are two odd numbers summing to 2n.

This provides a general proof that any even number can be written as the sum of two odd numbers.
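The two cases of the algebra translate directly into a rule that produces the pair of odd numbers for any given even number. The Python sketch below is illustrative only; the function name `two_odd_summands` is an assumption. The loop at the end checks the rule for the first ten even numbers. Such a check covers only finitely many cases; it is the algebra, not the loop, that covers them all.

```python
def two_odd_summands(even):
    """Return two odd numbers that sum to the given positive even number."""
    if even <= 0 or even % 2 != 0:
        raise ValueError("expected a positive even number")
    n = even // 2
    # n odd: n + n. n even: (n - 1) + (n + 1).
    return (n, n) if n % 2 == 1 else (n - 1, n + 1)

for even in range(2, 21, 2):
    a, b = two_odd_summands(even)
    assert a % 2 == 1 and b % 2 == 1 and a + b == even
    print(even, "=", a, "+", b)
```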

The details of this little piece of algebra are unimportant. All that matters is that it is a finite piece of argumentation that can be traced through in a short time. For the finitist, the truth of infinite totalities of arithmetic propositions resides solely in such a finite proof.

Does it matter? This conception makes a difference when we have arithmetic propositions for which we have no proofs. For example, it is widely accepted that "Goldbach's conjecture" is true:

Every even number greater than 2 can be written as the sum of two prime numbers:

4 = 2 + 2

6 = 3 + 3

...

12 = 5 + 7

14 = 3 + 11

...

While the conjecture has been checked for many, many numbers, we have no general proof.
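A check of this kind is simple to write down. The Python sketch below is a minimal illustration, with hypothetical function names `is_prime` and `goldbach_pair` and an arbitrarily chosen bound of 1000. It searches for a pair of primes summing to each even number up to the bound. It is a finite check only and says nothing about the even numbers beyond the bound.

```python
def is_prime(n):
    """Trial division; adequate for the small numbers used here."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True

def goldbach_pair(even):
    """First pair of primes (p, q) with p <= q and p + q == even, else None."""
    for p in range(2, even // 2 + 1):
        if is_prime(p) and is_prime(even - p):
            return p, even - p
    return None

limit = 1000  # arbitrary illustrative bound
assert all(goldbach_pair(e) is not None for e in range(4, limit + 1, 2))
print(goldbach_pair(12), goldbach_pair(14))  # (5, 7) (3, 11)
```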

According to the finitist, if this is a conjecture for which no finite proof can be given then it is not a truth of arithmetic.

This finitist conclusion is awkward since (as Gödel showed) there are many propositions of arithmetic that we would like to take as true, but for which no finite proof can be given. (We do not know if Goldbach's conjecture is one.)

So what can it mean to say that they are true? If we allow the possibility in principle of supertasks and the completions of an infinity of acts, then there is a computational answer.

The truth of Goldbach's conjecture simply resides in the possibility of a supertask computation checking each even number and finding the pairs of prime numbers that sum to it.

Check 4 at 1/4 minute to midnight.

Check 6 at 1/6 minute to midnight.

Check 8 at 1/8 minute to midnight.

Check 10 at 1/10 minute to midnight.

and so on infinitely.

The truth of Goldbach's conjecture resides in a positive check resulting for each even number in this supertask computation.

Surely Thomson's lamp must be on or off at midnight, whether or not we can say which is the case. Does not that mean that the paradox persists?

Surely the marble of Black's transfer machine must be somewhere in space, whether or not we can say what that position is. Does not that mean that the paradox persists?

Consider the proposition "There are infinitely many stars." For it to be true, there simply have to be infinitely many stars. This is a factual condition about the world about us. Can the same analysis be given for the proposition in arithmetic: "There are infinitely many prime numbers"?

June 15, September 13, 21, 2021. Acknowledgment for Thomson lamp simulation added February 10, 2022. January 25, 2023.

Copyright, John D. Norton