HPS 2101/Phil 2600 | Philosophy of Science | Fall 2022


Is machine learning bringing the next revolution in science?

The single greatest transformation in science underway now is the infusion of machine learning into almost all sciences. This infusion is a topic ripe for analysis by philosophers of science. Is something of foundational importance underway? If so, what is it? If not, why is there all the fuss?

The challenge to philosophers of science is to provide a sharp formulation of the foundational issue or foundational question. This is not an easy task. Rather, it requires considerable creativity by philosophers of science to find these sharp formulations. Only after that has been done do these formulations appear easy or obvious.

Machine learning is infusing into almost all sciences.

An April 2017 study by the Royal Society (UK) called "The AI Revolution in Science" lists technical breakthroughs in methods:

• Convolutional neural networks

• Reinforcement learning

• Transfer learning

• Generative adversarial networks

It gives examples of "AI as an enabler of scientific discovery":

• Using genomic data to predict protein structures

• Understanding the effects of climate change on cities and regions

• Finding patterns in astronomical data

It lists examples of new applications to be expected:

• Satellite imaging to support conservation

• Understanding social history from archive material

• Materials characterisation using high-resolution imaging

• Understanding complex organic chemistry

Recent major discovery:

"'The entire protein universe': AI predicts shape of nearly every known protein," Nature, News, July 28, 2022.

There is much celebratory "revolution" talk.

Chris Anderson, "The End of Theory: The Data Deluge Makes the Scientific Method Obsolete," Wired, June 23, 2008.

"The scientific method is built around testable hypotheses. These models, for the most part, are systems visualized in the minds of scientists. The models are then tested, and experiments confirm or falsify theoretical models of how the world works. This is the way science has worked for hundreds of years."

"There is now a better way. Petabytes allow us to say: 'Correlation is enough.' We can stop looking for models. We can analyze the data without hypotheses about what it might show. We can throw the numbers into the biggest computing clusters the world has ever seen and let statistical algorithms find patterns where science cannot."

Bryan McMahon, "AI is Ushering In a New Scientific Revolution," The Gradient.

"But AI is more than just a powerful tool in the hands of scientists and partner on this search. The technology is also transforming the scientific process, automating and adding to what people can accomplish using it. AI is ushering in a new scientific revolution by making remarkable breakthroughs in a number of fields, unlocking new approaches to science, and accelerating the pace of science and innovation. As partners in discovery, AI and scientists can explore more of science’s endless frontier together than either could alone."

Dan Falk, "How Artificial Intelligence Is Changing Science," Quanta, March 11, 2019.

"The latest AI algorithms are probing the evolution of galaxies, calculating quantum wave functions, discovering new chemical compounds and more. Is there anything that scientists do that can’t be automated?"

Is machine learning just glorified statistics?

A Google search on this question produces a profusion of denials, but no early hit on someone saying that machine learning just is glorified statistical analysis:

Search results, July 29, 2022

Why would anyone think that machine learning is just glorified statistics?

Answer: because that is exactly what it looks like!

Illustration through neural nets:

|

|

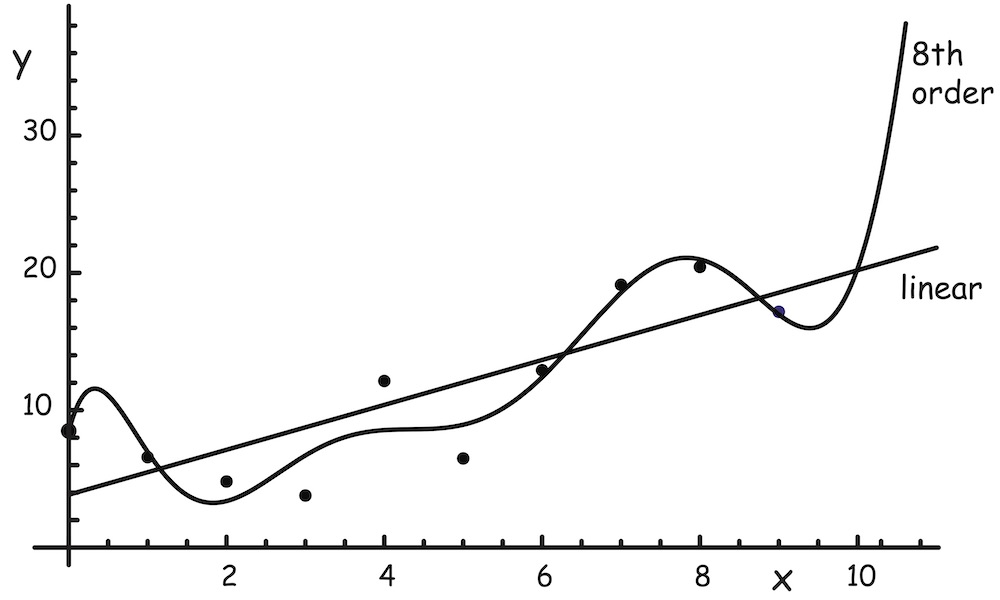

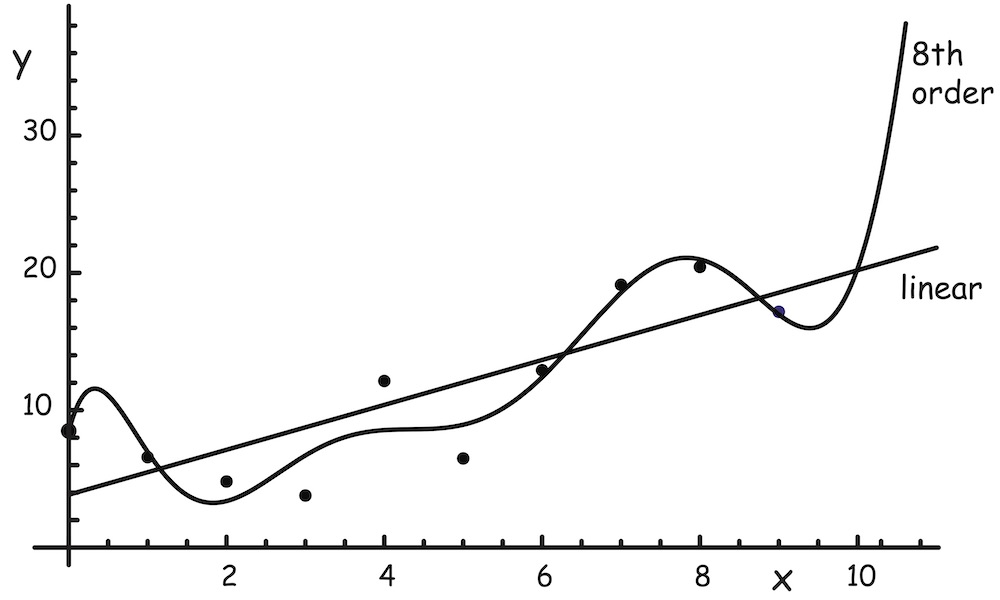

Regression

analysis seeks a function from x to y, where the function is

specified by parameters ai

Linear: y = a0 + a1x

8th order: y = a0 + a1x+ ... + + a8x8

|

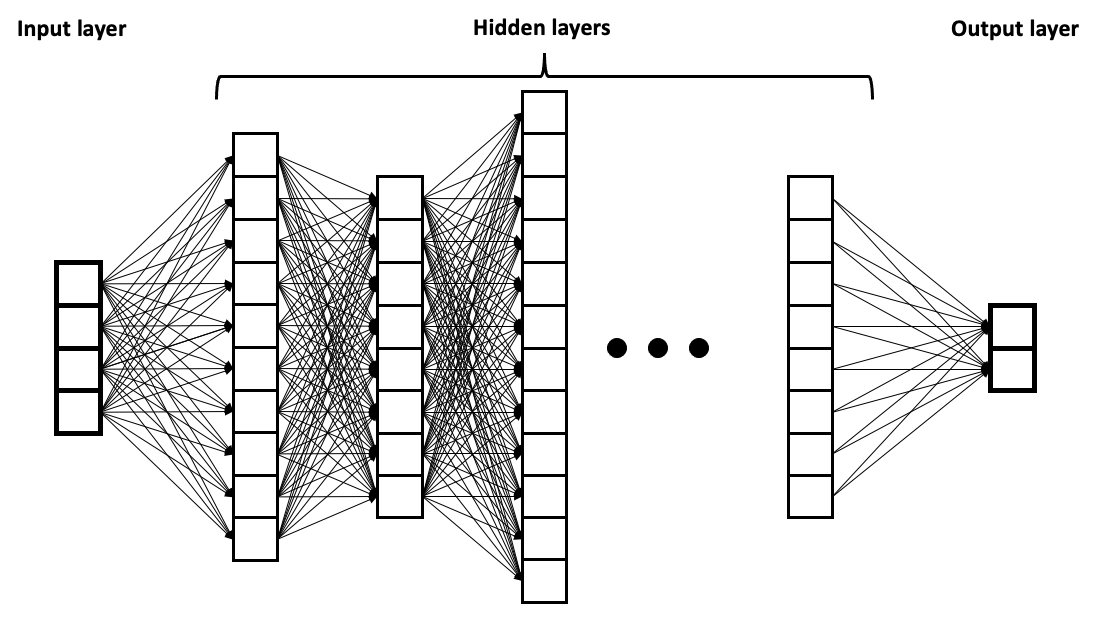

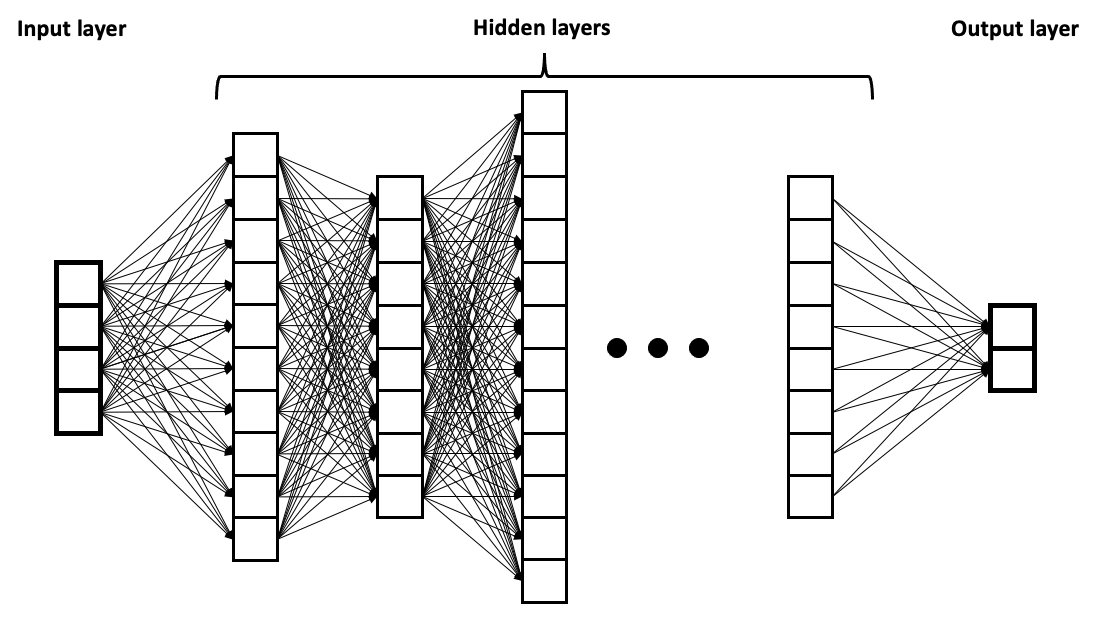

Neural

network analysis seeks a function from the variables in the input

layer to the variables in the output layer.

Variables xi in each layer are related to the variables yi

in the next layer by

yi = (normalizing factor) (w1x1 + w2x2

+ ...), for each i.

The concatenation of all these interlayer functions is the overall

function sought. |

The function is

adapted to a data set

{<x1, y1>, <x2, y2>,

<x3, y3>,...} |

The function is

adapted to "training" data by "back propagation" methods.

|

The

function chosen is the one that minimizes the difference between the

computed values of y and the values of y in the data set.

Minimization of sum of squared differences is standard.

|

They

search for the set of weights wi that minimizes the

difference between the function output and the training data output.

Minimization of sum of squared differences is standard.

|
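The parallel can be made concrete. Below is a minimal sketch in Python (standard library only) that fits one small data set both ways, using the sum of squared differences as the measure of fit in each case. The data points are invented for illustration, and all function names are hypothetical. On the regression side, the parameters $a_i$ are found by solving the least-squares normal equations. On the neural network side, the sketch assumes a single hidden layer of tanh units (standing in for the "normalizing factor") feeding one linear output unit, with weights adjusted by plain gradient descent on back-propagated gradients; the layer size, learning rate and number of epochs are arbitrary choices, not anything prescribed above.

```python
import math
import random

# Hypothetical training data {<x1, y1>, <x2, y2>, ...}, invented for illustration.
XS = [0.0, 0.25, 0.5, 0.75, 1.0]
YS = [1.0, 3.1, 4.9, 7.2, 8.8]


def sse(predict, xs, ys):
    """Sum of squared differences between computed and data values of y."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys))


def solve(A, b):
    """Solve A a = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    a = [0.0] * n
    for r in range(n - 1, -1, -1):
        a[r] = (M[r][n] - sum(M[r][c] * a[c] for c in range(r + 1, n))) / M[r][r]
    return a


def fit_polynomial(xs, ys, order):
    """Regression: parameters a_0..a_order minimizing the sum of squared
    differences, found by solving the normal equations."""
    k = order + 1
    A = [[sum(x ** (j + m) for x in xs) for m in range(k)] for j in range(k)]
    b = [sum(y * x ** j for x, y in zip(xs, ys)) for j in range(k)]
    return solve(A, b)


def polynomial(coeffs):
    """The function y = a_0 + a_1 x + ... specified by the fitted parameters."""
    return lambda x: sum(a * x ** j for j, a in enumerate(coeffs))


def train_network(xs, ys, hidden=3, lr=0.002, epochs=5000, seed=0):
    """Neural net: one hidden layer of tanh units feeding a linear output,
    with weights adjusted by back propagation (gradient descent on the SSE)."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0
    history = []  # SSE at the start of each epoch
    for _ in range(epochs):
        gw1, gb1, gw2, gb2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        loss = 0.0
        for x, t in zip(xs, ys):
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]  # forward pass
            y = sum(w2[j] * h[j] for j in range(hidden)) + b2
            loss += (y - t) ** 2
            dy = 2.0 * (y - t)  # derivative of the squared difference
            gb2 += dy
            for j in range(hidden):  # backward pass through each hidden unit
                gw2[j] += dy * h[j]
                dz = dy * w2[j] * (1.0 - h[j] ** 2)  # tanh'(z) = 1 - tanh(z)^2
                gw1[j] += dz * x
                gb1[j] += dz
        history.append(loss)
        for j in range(hidden):  # gradient-descent step on every weight
            w1[j] -= lr * gw1[j]
            b1[j] -= lr * gb1[j]
            w2[j] -= lr * gw2[j]
        b2 -= lr * gb2

    def predict(x):
        h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
        return sum(w2[j] * h[j] for j in range(hidden)) + b2

    return predict, history


if __name__ == "__main__":
    line = fit_polynomial(XS, YS, 1)
    quartic = fit_polynomial(XS, YS, 4)  # highest order five points determine
    net, history = train_network(XS, YS)
    print("linear coefficients:", line)  # approximately [1.06, 7.88]
    print("SSE linear:", sse(polynomial(line), XS, YS))
    print("SSE quartic:", sse(polynomial(quartic), XS, YS))
    print("SSE network:", history[0], "->", sse(net, XS, YS))
```

Run as a script, it prints the fitted line (approximately $a_0 = 1.06$, $a_1 = 7.88$) and the sum of squared differences for each fit. The quartic, the highest order that five points can determine, passes through every point, so its sum is essentially zero. What differs between the two methods is the family of functions searched and how the search is carried out (a single linear solve versus many small downhill steps); the criterion of fit is the same.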

Philosophers of science on machine learning

Celebratory:

"Philosophy of Science meets Machine Learning," Conference Program, 2021.

"Machine learning (ML) does not only transform businesses and the social sphere, it also fundamentally transforms science and scientific practice."

Critical:

Suzanne Kawamleh, "Can Machines Learn How Clouds Work? The Epistemic Implications of Machine Learning Methods in Climate Science," Philosophy of Science, 88 (December 2021) pp. 1008–1020.

"...Scientists are now replacing physically based parameterizations with neural networks that do not represent physical processes directly or indirectly. I analyze the epistemic implications of this method and argue that it undermines the reliability of model predictions. I attribute the widespread failure in neural network generalizability to the lack of process representation. The representation of climate processes adds significant and irreducible value to the reliability of climate model predictions."

Emily Sullivan, "Understanding from Machine Learning Models," The British Journal for the Philosophy of Science, 73, number 1, March 2022.

"...In this article, using the case of deep neural networks, I argue that it is not the complexity or black box nature of a model that limits how much understanding the model provides. Instead, it is a lack of scientific and empirical evidence supporting the link that connects a model to the target phenomenon that primarily prohibits understanding."

David S. Watson, "Conceptual challenges for interpretable machine learning," Synthese (2022) 200:65.

"... I argue that the vast majority of IML [interpretable machine learning] algorithms are plagued by

(1) ambiguity with respect to their true target;

(2) a disregard for error rates and severe testing; and

(3) an emphasis on product over process.

... failure to acknowledge these problems can result in counterintuitive and potentially misleading explanations. Without greater care for the conceptual foundations of IML, future work in this area is doomed to repeat the same mistakes."

Deflationary:

Julie Jebeile, Vincent Lam, Tim Räz, "Understanding climate change with statistical downscaling and machine learning," Synthese (2021) 199:1877–1897.

"... compare how machine learning and standard statistical techniques affect our ability to understand the climate system. For that purpose, we put five evaluative criteria of understanding to work: intelligibility, representational accuracy, empirical accuracy, coherence with background knowledge, and assessment of the domain of validity. We argue that the two families of methods are part of the same continuum where these various criteria of understanding come in degrees, and that therefore machine learning methods do not necessarily constitute a radical departure from standard statistical tools, as far as understanding is concerned."

More Examples in Science

Boyuan Chen et al., "Discovering State Variables Hidden in Experimental Data," arXiv:2112.10755v1 [math.DS]

"... using video recordings of a variety of physical dynamical systems, ranging from elastic double pendulums to fire flames. Without any prior knowledge of the underlying physics, our algorithm discovers the intrinsic dimension of the observed dynamics and identifies candidate sets of state variables. We suggest that this approach could help catalyze the understanding, prediction and control of increasingly complex systems."

Grey S. Nearing et al., "What Role Does Hydrological Science Play in the Age of Machine Learning?" Water Resources Research, 57, e2020WR028091.

"However, with the accelerating development of modern machine learning (ML) and deep learning (DL) in particular, we know that the reason is the third one listed: watershed‐scale theories (and models) could have been derived from currently available observation data, but the hydrology community simply failed to do so."

"... DL [deep learning] learned to predict in unseen basins better than traditional models..."

This particular paper is remarkable for its heavy engagement with our history and philosophy of science literature:

"On April 27, 1900 William Thomson (Lord Kelvin) gave his “Two Clouds” speech (“Nineteenth‐Century Clouds over the Dynamical Theory of Heat and Light”) at the Royal Institution,..."

"In 1987, Keith Beven gave what might be considered hydrology's version of the Two Clouds speech at a symposium of the International Association of Hydrological Sciences (IAHS) (Beven, 1987). He took a perspective inspired by Thomas Kuhn's theory of scientific revolutions (Kuhn, 1962) ... He proposed that two things would be necessary to push the field of surface hydrology into a new period of “normal science”..."

"As an applied science, hydrology is motivated by both epistēmē and technē..."

"This is the problem of holist underdetermination (Duhem, 1954; Laudan, 1990), whereby auxiliary hypotheses confound the ability to falsify specific hypotheses."

"Cartwright and McMullin (1984) argued that phenomenological laws, not theoretical laws, are the only thing that can actually be tested."

Algorithmic Bias

Topic caution: "low-hanging fruit"

This aspect of the modern reliance on algorithmic techniques has attracted more attention, I believe, than other problems. See, for example:

Sina Fazelpour and David Danks, "Algorithmic bias: Senses, sources, solutions," Philosophy Compass, 2021; 16:e12760.

"Data‐driven algorithms are widely used to make or assist decisions in sensitive domains, including healthcare, social services, education, hiring, and criminal justice. In various cases, such algorithms have preserved or even exacerbated biases against vulnerable communities, sparking a vibrant field of research focused on so‐called algorithmic biases."

Jeffrey Dastin, "Amazon scraps secret AI recruiting tool that showed bias against women," Reuters, 2018.

"Amazon.com Inc's AMZN.O machine-learning specialists uncovered a big problem: their new recruiting engine did not like women..."